The rise of Artificial Intelligence (AI) in cyber warfare has ushered in a new era of state-sponsored espionage, posing unprecedented risks to global security. North Korea’s notorious hacking campaigns, aimed at stealing classified military information and financing its banned nuclear program, exemplify how AI can enhance state-sponsored cyber espionage. One North Korean hacking group, APT45, also known as Andariel, has been linked to a range of cyberattacks against defense manufacturers and engineering firms in the United States and South Korea.

North Korea’s cyber operations have targeted critical sectors globally. In one case, Rim Jong Hyok, a military intelligence operative, was indicted for hacking into U.S. hospitals, NASA, and military bases, deploying ransomware that disrupted healthcare services and encrypted sensitive data. The ransoms were demanded in Bitcoin and laundered through a Chinese bank to fund further cyberattacks.

North Korean operatives have also exploited the rise of remote work by posing as IT workers using falsified identities. For example, “Kyle,” a North Korean agent, secured a remote job in the U.S. and had company laptops shipped to North Korea, giving him direct access to corporate networks.

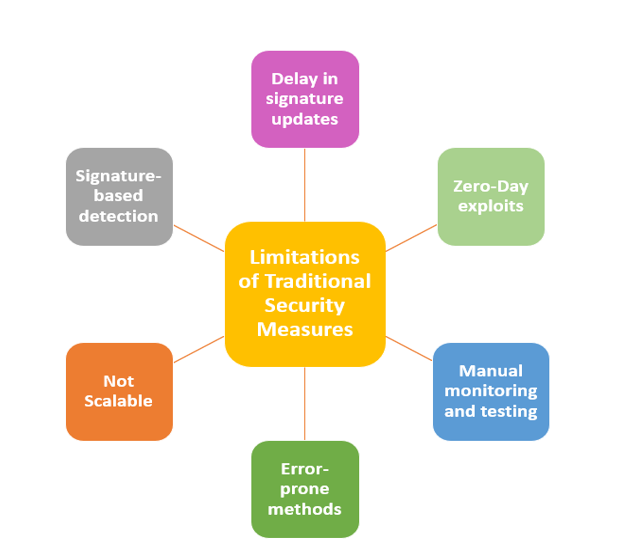

These campaigns highlight the growing threat of AI-driven malware, which can evolve rapidly and adapt to its environment, leaving traditional defenses such as firewalls and signature-based antivirus software increasingly ineffective.

North Korea’s cyber espionage is not unique, but AI’s ability to automate complex attacks amplifies its sophistication. According to the United Nations Security Council’s March 2024 report, North Korea stole approximately three billion dollars’ worth of cryptocurrency between 2017 and 2023 to fund its nuclear weapons program. North Korean hackers have used AI technologies, such as OpenAI’s large language models, to automate phishing campaigns and identify targets more efficiently, making state-sponsored espionage harder to counter. In the reconnaissance phase, AI lets attackers analyze massive datasets to locate network vulnerabilities far faster than manual methods allow. This automation helps them breach systems undetected and steal sensitive data and digital assets, as in the high-profile cryptocurrency thefts that bankroll the regime’s weapons program.

The AI-enhanced malware reportedly used in these campaigns can self-evolve to bypass conventional defenses, underscoring the limitations of traditional cybersecurity strategies against AI-driven threats. Cybersecurity strategies must therefore evolve as well, with continuous monitoring and regular updates that incorporate AI-specific defenses. Failing to do so risks opening security gaps, as existing security controls may become outdated or incomplete.

In response, some nations have recognized the growing need to integrate AI into their own cybersecurity strategies. South Korea, for example, revised its National Cybersecurity Strategy to incorporate AI-driven tools that detect and respond to cyber threats in real time. Such adaptive measures allow faster detection of anomalies and enable predictive threat intelligence, shortening the time needed to react to intrusions. Yet AI-driven defense raises significant ethical concerns of its own: when AI processes vast amounts of data to detect threats, the line between effective cybersecurity and violations of privacy and civil liberties becomes increasingly blurred.

AI has also enabled new forms of social engineering, making cyberattacks more targeted and persuasive. Cybercriminals can now craft realistic phishing emails and deepfake videos that are nearly indistinguishable from legitimate communications. AI-powered voice and video cloning scams, for instance, have tricked victims into divulging sensitive information by mimicking trusted individuals. Because these attacks exploit human trust, they can be difficult to detect without advanced AI-based defenses.

The FBI has raised alarms about the growing use of AI in phishing and social engineering, noting that AI can increase both the scale and the accuracy of such attacks, leading to more successful intrusions. “As technology continues to evolve, so do cybercriminals’ tactics. Attackers are leveraging AI to craft highly convincing voice or video messages and emails to enable fraud schemes against individuals and businesses alike,” said FBI Special Agent in Charge Robert Tripp. “These sophisticated tactics can result in devastating financial losses, reputational damage, and compromise of sensitive data.”

According to the 2024 Human Risk in Cybersecurity Report, more than half of U.S. workers worry their organization may be hit by a cyberattack, with 85% acknowledging that AI has made these attacks more sophisticated.

Younger workers, in particular, feel ill-equipped to handle potential threats, and many fear that their own actions could inadvertently expose their employers to risk.

Despite the threats posed by AI-enhanced hacking, the technology itself offers some solutions. AI-powered cybersecurity tools are being developed to counter these evolving threats, and because they can analyze vast amounts of data in real time, they can detect and respond to malicious activity more effectively than traditional security measures. “AI plays a crucial role in enhancing cyber defenses, detecting and responding to threats more effectively by analyzing vast amounts of data in real-time and identifying malicious activity,” said Tehila, who also served in the Israel Defense Forces, specializing in cybersecurity.

Because AI can learn from patterns in past activity, it can help anticipate cyberattacks and react faster than human-operated systems. The use of AI in cybersecurity is already showing promising results: organizations report significant reductions in the cost of data breaches thanks to AI-driven threat detection.

However, this arms race between cybercriminals and defenders presents a paradox: while AI offers powerful tools for defense, it also lowers the barrier to entry for novice hackers. As experts from the National Security Agency have pointed out, AI’s accessibility means that even less skilled individuals can carry out sophisticated cyberattacks. In the near future, AI will almost certainly increase the frequency and intensity of cyberattacks: the UK’s National Cyber Security Centre predicts that by 2025, AI will significantly enhance existing hacking tactics, allowing both state and non-state actors to conduct more sophisticated operations with greater ease.

This democratization of hacking capabilities further worsens the threat landscape, because AI can automate every stage of an attack, from reconnaissance to execution, as AI-enhanced ransomware campaigns illustrate.

The integration of AI into cyber espionage presents both significant risks and opportunities for global security. State-sponsored hackers have already harnessed AI to conduct highly sophisticated cyberattacks, targeting critical infrastructure and defense systems. While AI can enhance cybersecurity defenses, it also creates new ethical dilemmas and lowers the technical barriers for cybercriminals. As nations race to defend against AI-driven cyber threats, the global security landscape will inevitably become more complex and interconnected, requiring collaborative international efforts to mitigate the risks posed by AI-enhanced cyber espionage.