Mindy Nunez Duffourc*, Sara Gerke** & Konrad Kollnig***

Introduction

Generative AI (“GenAI”) is a powerful tool in the content generation toolbox. Its modern debut via applications like ChatGPT and DALL-E enamored users with human-like renditions of text and images. As new user accounts grew exponentially, these models soon gained a foothold in various industries, from law to medicine to music. The GenAI honeymoon period ended when questions about GenAI development and content began to mount: Is this content reliable? Can GenAI harm consumers and others? What are the implications for intellectual property (IP) rights? Does GenAI violate data privacy laws? Is it ethical to use AI-generated content? Users have already faced the consequences of putting blind faith in GenAI. For example, a lawyer who relied on ChatGPT to perform legal research was sanctioned for including fictional cases in his court pleadings.1 Additionally, GenAI developers began to encounter scrutiny related to their development and marketing of GenAI tools. In Europe, Italy temporarily banned ChatGPT, citing concerns about data privacy violations.2 Recently, a non-profit organization focused on private enforcement of data protection laws in the European Union (EU) claimed that ChatGPT’s provision of inaccurate personal data about individuals violates data privacy.3 In the United States (US), lawsuits alleged violations of IP, privacy, and property rights resulting from developers’ use of massive amounts of data to train GenAI.4

The increasing development and use of GenAI has spurred data privacy concerns in both the EU and the US. According to the US Federal Trade Commission (FTC), “[c]onsumers are voicing concerns about harms related to AI—and their concerns span the technology’s lifecycle, from how it’s built to how its [sic] applied in the real world.”5 While the introduction of new technologies has generally led to significant legislative retooling in the area of data privacy in the last decade, with the EU adopting the General Data Protection Regulation (“GDPR”) and several US states following suit, legislators designed these laws before GenAI models, like ChatGPT, were on the radar. In April 2023, the European Data Protection Board (EDPB) established a ChatGPT taskforce to coordinate regulatory enforcement in the EU Member States “on the processing of personal data in the context of ChatGPT.”6

The use of personal data to develop and update GenAI models can harm individuals by disclosing personal data, including sensitive personal data, to a broad audience; enabling individual profiling for targeting, monitoring, and potential discrimination; producing false information; and limiting an individual’s ability to keep their personal data private.7 This article examines how the current approaches to data privacy in the US and EU govern personal data in the GenAI data lifecycle. We focus specifically on the flow of personal data in the GenAI data lifecycle because the collection and use of that data have important data privacy implications for individuals.

Part I introduces GenAI models. It traces the development of GenAI architecture and provides a technical overview of modern GenAI. It discusses the capabilities and limitations of GenAI and provides a quick glimpse into the data sources that power these models.

Part II sets forth the current frameworks governing personal data in the US and EU. It discusses how these frameworks aim to protect personal data and provides the basic definitions for various types of data that have important implications in the GenAI data lifecycle. This part is supplemented by tables in the appendices that provide a summary comparison of the treatment of various types of data—including personal data, sensitive personal data, de-identified, pseudonymized, and anonymized data, and publicly available data—in US and EU regulatory frameworks.

Part III outlines the flow of personal data in the GenAI data lifecycle and its implications for data privacy. It describes the role of personal data in GenAI development and training, how GenAI developers might use personal data to improve existing GenAI models or develop new models, and how personal data can become part of a GenAI model’s output. Finally, it discusses the retention of personal data in the GenAI data lifecycle.

Part IV identifies data privacy implications that arise as personal data flows through the GenAI data lifecycle and analyzes how the current frameworks governing personal data might address these data privacy implications in the US and EU. First, it discusses the privacy implications and governance of using publicly available data in the GenAI data lifecycle. Second, it discusses the privacy implications and governance of using private and sensitive personal data in the GenAI data lifecycle. Finally, it discusses the loss of control over personal data in the GenAI data lifecycle.

I. Generative AI

GenAI describes AI models that create content like images, videos, sounds, and text. Although the public release of the latest generation of GenAI models came as a surprise to many, the underlying technologies had been in development for decades.8 One strand of GenAI takes the form of large language models (LLMs), like ChatGPT, that use sophisticated deep learning models for next-word prediction to generate human-like text.9 Next-word prediction is possible because words do not follow each other randomly in a text, but depend on their context.10 In other words, if one knows what other words have been written so far in a piece of text, then it is possible to build a “model” that predicts the following word with relatively high accuracy.

Some of the earliest approaches to next-word prediction were n-gram models, which date back to Claude Shannon’s revolutionary work on information theory in the 1940s.11 An n-gram model is a very simple language model. It looks at a fixed number of preceding words and tries to guess which word is most likely to come next.12 For example, a 2-gram model (where n = 2) looks at only one word to predict the next one (i.e., a total of two words, hence “2-gram”).13 If a 2-gram model is given the word “How” as context, it might guess that the next word is “are” because “How are” is a common pairing of words. Then, it can use “are” to guess the next word, maybe “you.” Together, this gives the text “How are you.” By predicting the next word again and again, algorithms can write large amounts of text that may or may not look like it was written by a human, depending on the quality of the model. Like other language models, including LLMs, n-gram models are trained on large corpora of text to learn which word is, statistically speaking, most likely to come next.14
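
The 2-gram procedure described above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: the function names (`train_bigram`, `predict_next`, `generate`) and the four-sentence toy corpus are hypothetical, and practical n-gram models are trained on far larger corpora and usually smooth their estimates for word pairs they have never seen.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        # Pair each word with the word that immediately follows it.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if none was seen."""
    followers = model.get(word)  # .get() avoids creating empty entries
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, max_words: int = 10) -> str:
    """Greedily extend `start` one predicted word at a time."""
    words = [start]
    while len(words) < max_words:
        next_word = predict_next(model, words[-1])
        if next_word is None:  # no known continuation, so stop
            break
        words.append(next_word)
    return " ".join(words)


# Hypothetical toy corpus, not real training data.
corpus = ["How are you", "How are you", "How are they", "Who are you"]
model = train_bigram(corpus)
print(generate(model, "How"))  # prints "How are you"
```

Because “are” is followed by “you” three times and by “they” only once in the toy corpus, the model picks “you,” and generation stops there because the corpus never shows any word following “you.”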

Modern-day LLMs also use next-word prediction for text generation but are significantly more sophisticated than n-gram models. Unlike n-gram models, they are not limited to a small, fixed number of preceding words but can, instead, take into account much larger inputs of text. To do so, they can comprise hundreds of billions of parameters, which are “mathematical relationship[s] linking words through numbers and algorithms.”15 Training such a large number of parameters requires a large amount of training data (e.g., hundreds of gigabytes of data), as well as significant computational resources (e.g., millions of dollars of energy consumption and computing hardware). For example, OpenAI trained GPT-3 with the Common Crawl, WebText2, Books1, Books2, and English-language Wikipedia datasets.16 Google’s original LLM-based chatbot, Bard, used Language Models for Dialog Applications (“LaMDA”), which was trained using 1.56 trillion words of publicly available text and dialogue on the internet.17 According to Meta, its LLM, Llama 2, was also trained on an enormous amount of publicly available text on the internet.18 By training on the vast amount of text available on the internet and in books, LLMs have become extremely good at mimicking previous human-generated work through next-word prediction. Yet, since these models are so dependent on previously existing text, they currently struggle to produce accurate textual outputs beyond their training data.19 Thus, LLMs are good at producing text that looks reliable, but since they have no semantic understanding of the text that they write, they have been deemed “stochastic parrots.”20

The models that underpin all state-of-the-art LLMs rely on the “Transformer” architecture or a closely related one.21 This deep learning architecture was invented by researchers at Google and first published in 2017.22 Transformers use a mechanism called “self-attention,” which lets the model weigh how relevant every word in the input is to every other word. This approach simplified the model architecture compared to previous models and achieved cutting-edge performance on language tasks, including text generation.23
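
To make “self-attention” less abstract, the sketch below computes single-head scaled dot-product attention, softmax(QKᵀ/√d_k)·V, the core operation of the 2017 Transformer design, in plain Python. It is a simplified illustration and not any production model: the toy input vectors and the identity projection matrices are our own assumptions, and real Transformers add multiple attention heads, positional information, masking, and many layers of learned weights.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(row)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention over the rows (tokens) of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # scores[i][j]: how relevant token j is to token i
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted mix of all value vectors.
    return matmul(weights, v), weights


# Hypothetical toy input: three "tokens", each a 2-dimensional vector.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
```

Each row of `weights` records how strongly one token “attends” to every token in the input, including itself; each row sums to one and determines how the value vectors are mixed into that token’s output.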

Other GenAI models also use deep learning models but create non-textual outputs like images, videos, and sounds, or even combine different such “modalities” (e.g., producing an image based on textual input). While these models do not necessarily use the Transformer architecture, all GenAI models share many of the same challenges and limitations. For example, like LLMs, other GenAI models also need to be trained on vast amounts of data. Much of this data comes from information on the internet that is extracted or “scraped” by automated tools. Large-Scale Artificial Intelligence Open Network (“LAION”) provides a publicly available image dataset that can be used to train GenAI models.24 Stable Diffusion’s AI model, which underlies popular AI image generation applications like Midjourney and DreamStudio, was trained with 2.3 billion images scraped from the internet by the nonprofit organization Common Crawl.25 This dataset includes not only stock images but also hundreds of thousands of images from individuals on social media and blogging platforms, like Pinterest, Tumblr, Flickr, and WordPress.26 OpenAI’s DALL-E, too, was trained using millions of images on the internet.27 Google trained its text-to-music GenAI model, MusicLM, with datasets that are primarily sourced from over 2 million YouTube clips containing not only music, but a variety of other human, animal, natural, and background sounds.28 Google replaced Bard with a new multi-modal model, Gemini, which was trained not only with text, but also with images, audio, and video.29

GenAI has wide-ranging uses, from designing magazine covers,30 to creating new music,31 to summarizing medical records.32 These uses present a wide range of legal issues that stem from their use of massive amounts of data for development and training. For example, some of this data, like copyrighted books, might implicate IP rights.33 Some of this data, like an individual’s biographical information or photograph, might implicate data privacy rights. We now turn our attention to the latter.

II. The Legal Framework Governing Personal Data in the US and EU

Data is the lifeblood of GenAI. Some of this data is “personal data,” which is the term we use to refer to data that relates to or can be used to identify an individual. A subset of personal data that reveals particularly sensitive information about individuals is often labeled “sensitive personal data.” Personal data can be de-identified, pseudonymized, or anonymized. De-identification or pseudonymization describes a process aimed at preventing the identification of individuals in the data set itself, though it is possible to re-identify individuals by combining de-identified or pseudonymized data with data keys or other datasets. On the other hand, anonymized data usually refers to data that cannot be re-identified. Finally, publicly available data usually describes data, including personal data, that is already accessible to the public either through public records or through an individual’s own publication.

A. Legal Framework Governing Personal Data in the US

US data privacy law is “a hodgepodge of various constitutional protections, federal and state statutes, torts, regulatory rules, and treaties.”34 Although there is no single piece of federal legislation that governs personal data, there are several sector-specific federal laws that may offer protection for certain types of personal data, including the Federal Trade Commission (FTC) Act, the Gramm-Leach-Bliley Act, the Children’s Online Privacy Protection Act (COPPA), the Family Educational Rights and Privacy Act (FERPA), and the Health Insurance Portability and Accountability Act (HIPAA).35 At the state level, several states have enacted general data privacy laws, including California and Virginia.36 Finally, state tort law might also govern the collection and use of some personal data through privacy- and property-related torts.

1. Federal Laws

Section 5 of the Federal Trade Commission (FTC) Act prohibits deceptive and unfair business practices.37 It gives the FTC legal authority to establish, monitor, and enforce rules concerning deceptive and unfair practices that harm consumers, which can include protecting consumers’ personal data.38 The FTC adopts a consumer-centric concept of personal data by focusing on data that can be “reasonably linked to a specific consumer, computer, or other device.”39 The FTC has indicated that genetic data, biometric data, precise location data, and data concerning health are sensitive categories of consumers’ personal data.40

In 2012, the FTC established a Privacy Framework to provide guidance to commercial entities that collect consumer data to help them avoid running afoul of the broad consumer protections that the FTC enforces through the FTC Act.41 In this framework, the FTC adopted a risk-based approach to data de-identification and considered data that cannot be reasonably linked to a consumer as being in a “de-identified form.”42 Notably, it recommended that companies take measures to reduce the risk of re-identification before considering the data truly de-identified.43 More recently, the FTC has expressed skepticism of claims that personal data is “anonymous,” noting that, “[o]ne set of researchers demonstrated that, in some instances, it was possible to uniquely identify 95% of a dataset of 1.5 million individuals using four location points with timestamps.”44
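
The FTC’s point about location data can be illustrated with a toy uniqueness check. The sketch below assumes a hypothetical dataset in which names have already been replaced by pseudonymous IDs but each person’s trace of (place, hour) points is retained; it asks how many people are the only match for the first k points of their own trace. It is not the cited researchers’ methodology, only an illustration of why removing names alone may not prevent re-identification.

```python
Point = tuple[str, int]  # (place, hour): a hypothetical level of detail


def is_unique(user: str, known: set[Point], traces: dict[str, set[Point]]) -> bool:
    """True if `user` is the only person whose trace contains every known point."""
    matches = [u for u, trace in traces.items() if known <= trace]
    return matches == [user]


def fraction_unique(traces: dict[str, set[Point]], k: int) -> float:
    """Share of people singled out by the first k points of their own trace."""
    unique = 0
    for user, trace in traces.items():
        known = set(sorted(trace)[:k])  # deterministic choice of k points
        if is_unique(user, known, traces):
            unique += 1
    return unique / len(traces)


# Hypothetical "de-identified" traces: names removed, locations and times kept.
traces = {
    "user_a": {("cafe", 8), ("office", 9), ("gym", 18)},
    "user_b": {("cafe", 8), ("office", 9), ("park", 17)},
    "user_c": {("cafe", 8), ("school", 9), ("pool", 18)},
}
for k in (1, 2, 3):
    print(k, round(fraction_unique(traces, k), 2))
```

In this toy dataset, a single known point identifies no one, two points single out two of the three people, and three points single out everyone. Someone who knows a few places and times a person visited could therefore link that person to their pseudonymous trace.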

Because the FTC Act focuses generally on deceptive and unfair practices that harm consumers, it contains no exclusion for such practices that involve the use of publicly available data. In the past, the FTC has expressed concerns about the collection of publicly available data by individual reference services (IRSs), services that provide access to databases of publicly available data about individuals. In a 1997 report to Congress, the FTC embraced a self-regulatory approach relying on principles developed by the now-defunct IRS industry group to limit access to “non-public information,” which the FTC report defined as “information about an individual that is of a private nature and neither available to the general public nor obtained from a public record.”45 Today, publicly available data collected, aggregated, and sold by IRSs can serve as a valuable source of personal data in the GenAI lifecycle.

The Gramm-Leach-Bliley Act regulates consumer data provided in connection with obtaining financial services.46 The FTC exercises its legal authority to protect the privacy of financial data under the Gramm-Leach-Bliley Act through the Financial Privacy Rule (FPR).47 Under the FPR, “[p]ersonally identifiable financial information” includes information about a consumer obtained in connection with the provision of financial services and products that can be used to identify an individual consumer.48 By contrast, the FPR excludes “[i]nformation that does not identify a consumer” from the definition of “[p]ersonally identifiable financial information,”49 and lists “aggregate information” as an example of such excluded information.50 The FPR does not distinguish a separate category of “sensitive” personal information.51 The FPR governs only “nonpublic personal information,”52 and does not regulate most “publicly available information,” described as “information that you have a reasonable basis to believe is lawfully made available to the general public.”53

The FPR takes two main approaches to protecting the privacy of nonpublic personal information. First, it requires financial institutions to provide information about their privacy policies and disclosure practices.54 Generally, this information should be contained in a privacy notice that describes the nonpublic personal information that is collected and disclosed, the recipients of this information, the individual’s ability to opt out of certain third-party disclosures, and how the security and confidentiality of the information are protected.55 Second, it limits disclosures of nonpublic personal information and requires financial institutions to give individuals an opportunity to “opt out” of certain disclosures of their nonpublic personal information.56 Generally, the FPR allows disclosure if the required information has been provided in a privacy notice and, after a reasonable period of time, the individual has not opted out of the disclosure.57 However, disclosures are allowed without providing an opt-out if they are made so that a third party can perform services on the company’s behalf, provided that the individual received an initial privacy notice and the third party’s use of the information is contractually limited.58 Disclosures are allowed without providing either a privacy notice or an opt-out if they are made (1) for the purpose of processing transactions requested by the individual, (2) with the individual’s consent, (3) to protect confidentiality and prevent fraud, (4) in connection with compliance with industry standards, or (5) as required by law.59

COPPA protects the personal information of children under the age of 13.60 The FTC implements COPPA protections through the Children’s Online Privacy Protection Rule (COPPR).61 The COPPR defines “personal information” as “individually identifiable information about an individual collected online.”62 The rule does not distinguish a separate category of “sensitive” personal data.63 Under COPPA, de-identified data may not fall under the definition of regulated “personal information” if it does not include identifiers or trackers such as internet protocol addresses or cookies.64 COPPR governs only personal information when it is collected from a child online, but this could also include a subset of publicly available personal data.65

Under COPPR, online operators cannot collect or use personal information from children without first obtaining “verifiable parental consent.”66 It also requires operators of online services to disclose information about their collection and use of personal information obtained from children and take measures to protect the confidentiality and security of such information.67 COPPR gives parents the right to review their children’s personal information and withdraw consent for any further use of such information.68 However, parental consent is not required when the operator collects only necessary cookies, when information is provided for the purpose of obtaining consent, or in some cases when information is used for the limited purposes of protecting the safety of a child, responding to a specific and direct request from a child, protecting website security, or complying with legal obligations.69 Finally, COPPR requires the deletion of personal information once it is no longer “reasonably necessary to fulfill the purpose for which the information was collected.”70

The Family Educational Rights and Privacy Act (FERPA), which is administered and enforced by the US Department of Education (DOE), provides privacy protections for educational records, which include all information directly relating to a student that is maintained by an educational institution.71 Under FERPA, “[p]ersonally identifiable information” includes information such as names, addresses, identification numbers, date and place of birth, as well as “[o]ther information that, alone or in combination, is linked or linkable to a specific student that would allow a reasonable person in the school community, who does not have personal knowledge of the relevant circumstances, to identify the student with reasonable certainty.”72 Like COPPA and the Gramm-Leach-Bliley Act, FERPA does not distinguish a separate category of sensitive data.73 FERPA allows the non-consensual disclosure of de-identified records and information, which it describes as “the removal of all personally identifiable information provided that the educational agency or institution or other party has made a reasonable determination that a student’s identity is not personally identifiable, whether through single or multiple releases, and taking into account other reasonably available information.”74 The DOE recognizes data aggregation as a potential method for de-identifying educational data under FERPA.75 FERPA protects personal data in education records regardless of whether the data is otherwise publicly available.76

FERPA prohibits disclosure of most personally identifiable information in educational records to parties outside of the educational institution unless the student (or a parent, for students under 18) has provided prior written consent or the disclosure meets one of the enumerated exceptions (e.g., disclosure is required to comply with a law or standard, or to protect the student).77 To be valid, this consent must identify the specific records being disclosed, the purpose of the disclosure, and the party or parties receiving the disclosure.78 However, FERPA does not limit the disclosure of “directory information,” which includes “the student’s name, address, telephone listing, date and place of birth, major field of study, participation in officially recognized activities and sports, weight and height of members of athletic teams, dates of attendance, degrees and awards received, and the most recent previous educational agency or institution attended by the student.”79 Finally, FERPA provides students (or parents) with rights to access educational records and request amendments, including correction and deletion, of records if they are “inaccurate, misleading, or in violation of their rights of privacy.”80

The Health Insurance Portability and Accountability Act (HIPAA)81 governs a subset of personal data known as “[p]rotected health information” (PHI), which comprises, among other things, “[i]ndividually identifiable health information” that is created, used, or disclosed by so-called “[c]overed entit[ies]” in the course of providing healthcare services.82 “Individually identifiable health information” describes health information that identifies or can be reasonably used to identify an individual.83 The US Department of Health and Human Services (HHS) regulates PHI privacy through HIPAA’s Privacy Rule.84 The Privacy Rule governs the use and disclosure of patients’ PHI by a limited category of “covered entities,” which generally includes healthcare providers, insurers, clearinghouses, and the “business associates” of covered entities.85 Health data is not regulated as PHI under HIPAA’s Privacy Rule if it “does not identify an individual and with respect to which there is no reasonable basis to believe that the information can be used to identify an individual.”86 PHI can be de-identified either through an expert determination that the re-identification risk is “very small” or by removing 18 specific identifiers.87 De-identification can be accomplished using data aggregation, usually in combination with other de-identification techniques.88 HIPAA does not exempt publicly available personal information, which would still be considered PHI if it is created or maintained by a covered entity and sufficiently relates to a patient’s medical treatment.89
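
The identifier-removal route can be illustrated by a simplified transformation that drops direct identifiers and coarsens dates and ZIP codes. This is a hypothetical sketch, not a compliance tool: it covers only a handful of the 18 identifier categories (the field names are our own assumptions), it truncates every ZIP code to three digits without checking the population condition HIPAA attaches to three-digit ZIP areas, and it ignores HIPAA’s other date and age rules.

```python
# Hypothetical field names; only a small illustrative subset of identifiers.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "ssn", "medical_record_number"}


def strip_identifiers(record: dict[str, str]) -> dict[str, str]:
    """Return a copy of `record` with some direct identifiers removed and
    some quasi-identifiers coarsened (illustrative only, not HIPAA-compliant)."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "birth_date" in cleaned:
        # Keep only the year of an ISO-8601 date such as "1980-04-12".
        cleaned["birth_year"] = cleaned.pop("birth_date")[:4]
    if "zip" in cleaned:
        # Keep only the first three digits of the ZIP code.
        cleaned["zip3"] = cleaned.pop("zip")[:3]
    return cleaned


# Fictional patient record.
patient = {
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "birth_date": "1980-04-12",
    "zip": "02139",
    "diagnosis": "asthma",
}
print(strip_identifiers(patient))
# prints {'diagnosis': 'asthma', 'birth_year': '1980', 'zip3': '021'}
```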

HIPAA prohibits covered entities from using or disclosing PHI except in the following circumstances: (1) disclosure to the individual concerned, (2) for healthcare-related purposes, (3) pursuant to an authorization from the individual concerned or their representative, (4) to maintain directory information or for notification purposes after allowing the concerned individual an opportunity to object, (5) in emergency situations, (6) as required by law or public interest, or (7) when a limited data set that excludes certain direct identifiers is used for research, public health or health care operations.90 For an authorization to be valid, it must provide information about the specific PHI disclosed and the person receiving the PHI and contain an expiration date.91 Additionally, HIPAA sets forth the “minimum necessary” standard, which generally requires covered entities to limit their use and disclosure of PHI to what is necessary to accomplish a particular purpose.92 Finally, HIPAA provides individuals with the right to review their PHI and the right to request amendments to inaccurate or incomplete PHI.93

2. State Laws

At the state level, California was the first state to pass a comprehensive data privacy law in 2018 (effective since January 2020),94 followed by Virginia in 2021 (effective since January 2023).95 Meanwhile, several other states have passed broad privacy laws, all of which have already become effective or will become effective in the next few years.96 In this article, we focus on the regulation of personal data in California and Virginia.

In California, the California Consumer Privacy Act (CCPA), recently amended by the California Privacy Rights Act (CPRA),97 uses the term “personal information,” which is defined as “information that identifies, relates to, describes, is reasonably capable of being associated with, or could reasonably be linked, directly or indirectly, with a particular consumer or household.”98 Virginia’s Consumer Data Protection Act (VCDPA) defines “personal data” as “any information that is linked or reasonably associated to an identified or identifiable natural person.”99 Both the CCPA and the VCDPA recognize a subcategory of sensitive personal data. The CCPA uses the term “sensitive personal information,” which includes a consumer’s government identification numbers, financial account information, geolocation data, email and text message content, genetic and biometric data used to identify an individual, and data concerning race, religion, ethnicity, philosophical beliefs, union membership, health, sexual orientation, and sex life.100 Under the VCDPA, “sensitive data” is personal data that reveals an individual’s race, ethnicity, religion, medical diagnoses, sexual orientation, citizenship, immigration status, personal data of a child, geolocation data, and genetic or biometric data processed for the purpose of identifying an individual.101

The CCPA and VCDPA both generally describe de-identified data as information that cannot be reasonably linked to an individual consumer.102 In these cases, de-identified data is not regulated as personal data as long as companies take reasonable measures to ensure that the data is properly de-identified and reduce the risk of re-identification.103 Because the CCPA and the VCDPA do not govern de-identified data (albeit with a relatively narrow definition of such data), the more stringent category of anonymous data also falls outside of their scope. Regarding data aggregation, the CCPA explicitly excludes “aggregated consumer information,” which it defines as “information that relates to a group or category of consumers, from which individual consumer identities have been removed, that is not linked or reasonably linkable to any consumer or household, including via a device.”104 While the VCDPA does not expressly exempt aggregate data from its scope, aggregate data that can no longer be linked to an individual will likely fall outside of the definition of “personal data” under the act.105
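
Aggregation of the kind the CCPA describes can be sketched as counting records by group and withholding small groups, a common safeguard known as small-cell suppression. The grouping field, the sample records, and the threshold of five are assumptions for illustration; neither statute prescribes them, and aggregation alone does not guarantee that results are not “reasonably linkable” to a consumer (for instance, if overlapping releases can be compared).

```python
from collections import Counter


def aggregate_counts(records: list[dict[str, str]], group_by: str, min_count: int = 5) -> dict[str, int]:
    """Count records per group, suppressing groups smaller than `min_count`."""
    counts = Counter(record[group_by] for record in records)
    return {group: n for group, n in counts.items() if n >= min_count}


# Hypothetical consumer records: six in California, two in Virginia.
consumers = [{"state": "CA"}] * 6 + [{"state": "VA"}] * 2
print(aggregate_counts(consumers, "state"))  # prints {'CA': 6}
```

The Virginia group is withheld because a count of two could make it easier to single out the consumers behind it.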

Neither the CCPA nor the VCDPA governs publicly available data. The CCPA excludes “publicly available information,” which includes “information made available by a person to whom the consumer has disclosed the information if the consumer has not restricted the information to a specific audience.”106 Similarly, the VCDPA excludes “publicly available information,” which it defines as

[I]nformation that is lawfully made available through federal, state, or local government records, or information that a business has a reasonable basis to believe is lawfully made available to the general public through widely distributed media, by the consumer, or by a person to whom the consumer has disclosed the information, unless the consumer has restricted the information to a specific audience.107

Unlike the VCDPA, the CCPA does not consider “biometric information collected by a business about a consumer without the consumer’s knowledge” to be publicly available.108

The CCPA and VCDPA impose several obligations on businesses that collect and/or use consumers’ personal data. Prior to or at the time of collection, businesses must provide consumers with information about the types of personal data collected, the purposes of the collection, and information about sharing data with third parties.109 Unlike the CCPA, which only requires notification, the VCDPA requires consent prior to the collection or use of sensitive personal data.110 Additionally, under both regimes, the collection and use of consumers’ personal data must be “reasonably necessary and proportionate” to accomplish the stated purpose or compatible purposes.111 Under both laws, consumers have the right to opt out of the sale, sharing, or further disclosure of their personal information,112 request deletion of personal information,113 and request the amendment of inaccurate personal information.114

In addition to public laws that regulate the collection and use of personal data, private law might also govern personal data when it intersects with certain privacy interests. An individual has an interest in the privacy of their personal data, which includes a right to “control of information concerning his or her person.”115 A violation of such interest may give rise to tort claims for invasion of privacy, disclosure of private information, or intrusion upon seclusion if an individual suffers a legally cognizable harm.116 However, not all personal data implicate protected privacy interests. An individual must first have a reasonable expectation of privacy in the type of personal data that is obtained or disclosed.117 Absent this, the individual will not suffer the type of harm that is sufficient to confer standing.118 To determine whether users have a protected privacy interest in their personal data, it is relevant to consider “whether the data itself is sensitive and whether the manner it was collected … violates social norms.”119 Using these considerations, the Ninth Circuit agreed that Facebook users had a reasonable expectation of privacy in the “enormous amount of individualized data” that Facebook secretly obtained by using cookies to track the browsing activity of users who were logged out of the platform.120 On the other hand, the District Court for the Western District of Washington noted that “[d]ata and information that has been found insufficiently personal includes mouse movements, clicks, keystrokes, keywords, URLs of web pages visited, product preferences, interactions on a website, search words typed into a search bar, user/device identifiers, anonymized data, product selections added to a shopping cart, and website browsing activities.”121

B. The GDPR Framework Governing Personal Data in the EU

In the EU, the collection, use, and retention of personal data are generally prohibited unless allowed by law. The General Data Protection Regulation (GDPR), applicable since May 25, 2018, is an EU Regulation that directly governs the processing of personal data in all 27 EU Member States.122 In general, the GDPR governs entities that process personal data of individual “data subjects.”123 These entities are regulated as “Controllers” and/or “Processors” depending on what they do with the personal data. Controllers “determine[] the purposes and means of the processing of personal data,” while processors “process[] personal data on behalf of the controller.”124 Additionally, the GDPR is said to have an extraterritorial scope because, under certain conditions, it can govern controllers and processors outside of the EU who process personal data of a data subject located in the EU.125

Data “processing” means “any operation or set of operations which is performed on personal data” and includes, for example, collecting, using, storing, de-identifying, transferring, and deleting personal data.126 Under the GDPR, personal data can include any information that identifies a natural person or can be used to identify a natural person (directly or indirectly), including by reference to “a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.”127 Notably, the GDPR does not exclude publicly available data from its scope.128 Under the GDPR, “special categories of personal data” (or sensitive personal data) include “personal data revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership, and the processing of genetic data, biometric data for the purpose of uniquely identifying a natural person, data concerning health or data concerning a natural person’s sex life or sexual orientation.”129

The EU GDPR does not recognize a category of “de-identified data.” Instead, it refers to personal data that has undergone “pseudonymization,” which is

the processing of personal data in such a manner that the personal data can no longer be attributed to a specific data subject without the use of additional information, provided that such additional information is kept separately and is subject to technical and organisational measures to ensure that the personal data are not attributed to an identified or identifiable natural person.130

Notably, the GDPR does not exempt pseudonymized data from its scope, but instead views pseudonymization as a method for protecting personal data.131 Conversely, the GDPR does not govern anonymous data, which it describes as “information which does not relate to an identified or identifiable natural person or to personal data rendered anonymous in such a manner that the data subject is not or no longer identifiable.”132 However, there is conflicting regulatory guidance in the EU about how to interpret the anonymization standard.133 Some regulatory authorities take an absolutist approach that considers data anonymous only when it is impossible to re-identify the data, while others adopt a risk-based approach that considers data anonymous when there is no reasonable chance of re-identification.134 Since absolute anonymization may be “statistically impossible,” EU regulatory authorities have tended to adopt a risk-based approach to anonymization.135 Nor will the GDPR apply to fully “aggregated and anonymised datasets” when the “original input data … [is] destroyed, and only the final, aggregated statistical data is kept.”136

The GDPR protects personal data through its principles of lawfulness, fairness, transparency, purpose limitation, data minimization, accuracy, storage limitation, integrity, confidentiality, and accountability.137 To lawfully process personal data under the GDPR, there must be a lawful basis to support data processing.138 Under Article 6, there are six legal justifications upon which personal data processing can be based: (1) consent, (2) contract, (3) legal obligation, (4) vital interests of the data subject or another natural person, (5) public interest or official authority, and (6) legitimate interests of the controller or a third party.139 Processing special categories of personal data, including biometric data, data concerning health, data revealing racial or ethnic origins, and data potentially revealing sexual orientation, political opinions, religious, or philosophical beliefs, is generally prohibited and only allowed under certain conditions laid out in Article 9, such as obtaining the “explicit” consent of the natural person.140

Consent can only be a valid legal basis for processing personal data if it is (1) freely given, (2) specific, (3) informed, (4) unambiguous, and (5) as easy to give as to withdraw.141 For consent to be freely given, users must be able to refuse consent without suffering “significant negative consequences.”142 For consent to be specific, the user must provide consent for a specific purpose.143 According to the EDPB (which coordinates GDPR enforcement and succeeded the Article 29 Data Protection Working Party), neither blanket consent “for all the legitimate purposes” nor consent based on “an open-ended set of processing activities” is valid.144 For consent to be informed, the user must have the following information: (1) the identity of the controller(s), (2) the purpose for collecting and further processing the data, (3) the category of data collected, (4) the right to withdraw consent, (5) the existence of automated decision making (if any), and (6) the risks and safeguards associated with transferring data to third countries.145 For consent to be unambiguous, the user should provide consent via an “affirmative action.”146 A clear affirmative action in the digital sphere can include sending an email, submitting an online form, using an electronic signature, or ticking a box.147 Consent based on a user’s silence or inaction will not be valid.148

At the time of personal data collection, the GDPR also requires that data subjects be informed about the existence and purposes of the processing, which includes providing information about the “specific circumstances and context in which the personal data are processed.”149 This information should be in clear, plain, and easily understandable language.150 Once collected, the GDPR requires personal data to be stored in a manner that is sufficient to protect it “against unauthorised or unlawful processing and against accidental loss, destruction or damage.”151 This requires the implementation of technical and organizational measures to protect personal data.152 These measures should include pseudonymization and encryption of personal data and the implementation of measures that ensure confidentiality and integrity of processing systems as well as the ability to restore data if necessary.153 Personal data should not be stored for any longer than needed to accomplish the purposes for which it is being processed.154 However, data can be stored for a longer period “solely for archiving purposes in the public interest, scientific or historical research purposes or statistical purposes” as long as it is sufficiently protected.155

Finally, data subjects have, among other rights, the right to withdraw consent156 and to request to access,157 rectify,158 erase,159 transfer,160 restrict,161 or object162 to the processing of their personal data. The GDPR also gives individuals who suffer “material or non-material damage” as a result of a GDPR “infringement” the “right to receive compensation” and holds data controllers (and potentially even processors) “liable for the damage caused by processing which infringes this Regulation.”163 As a result, not only does the GDPR regulate data privacy in public law, but it also provides a private right of action for individuals who are harmed by violations of the GDPR.

III. The Flow of Personal Data in the GenAI Data Lifecycle

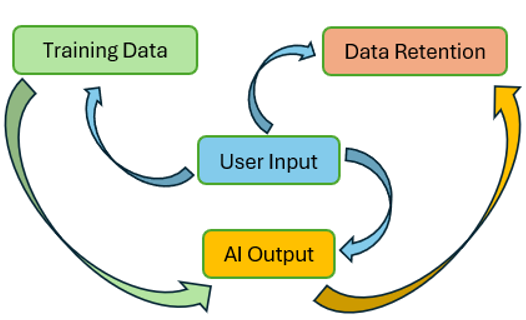

Personal data flows through the GenAI data lifecycle in various stages. In the development phase, personal data can be used to train the model. Once the GenAI model is released, users can provide more personal data while signing up and using the model. Next, the GenAI models themselves produce data in the form of outputs provided to users, which can also include personal data. Finally, developers can retain personal data that users input or that the AI models output to improve GenAI models or develop new models.

A. Training Data

Creators of GenAI use large datasets, sourced primarily from publicly available information on the internet, to train models.164 This data can be scraped from public social media profiles (e.g., LinkedIn), online discussions (e.g., Reddit), photo sharing sites (e.g., Flickr), blogs (e.g., WordPress), news media (e.g., arrest reports), information and research sites (e.g., Wikipedia), and even government records (e.g., voter registration records).165 Paywalls are not always effective at protecting online data from web scrapers, and pirated data, like illegal copies of books, can end up in a scraped data set.166

Personal data is no exception. For example, Meta admits that personal information like a blog post author’s name and contact information may be collected to train its GenAI models.167 Even sensitive personal data can be scraped to train GenAI models. Photos from a patient’s medical record were included in LAION, a publicly available data set used to train some GenAI image models.168

B. User Input of Data

Users of GenAI applications provide various data to the companies that control and deploy the AI models, including specific user account data, user behaviors, and more general data that users input to facilitate the generation of new content by GenAI. It is no surprise that many companies collect personal data from account holders (e.g., name, age, contact information, and billing details) to provide information and services. Perhaps less obvious to users, but still considered routine, is the collection of user data for website analytics, which provide companies with insight into how users interact with their websites and applications.169 Finally, developers can also collect personal data that users enter into various online applications, including personal data that users provide to prompt AI content generation. This is a broad category of data that can range from details of a private business deal used to draft contracts, to information about students for letters of recommendation, to a patient’s medical history for a referral. Users might also provide voice and image data to GenAI models that create art or music.

Developers might use user-provided personal data to develop or improve GenAI models.170 According to OpenAI’s Privacy Policy, it may use data that users provide, including user input of data to ChatGPT and DALL-E, for the development, training, and improvement of its GenAI models.171 Google Cloud Services claims that it does not train its AI models with data provided by its Cloud users without permission.172 However, Google’s Privacy Policy states that it “collect[s] the content you create, upload, or receive from others when using our services … includ[ing] things like email you write and receive, photos and videos you save, docs and spreadsheets you create, and comments you make on YouTube videos.”173 Elsewhere, the same policy states generally that Google “use[s] the information [it] collect[s] in existing services to help [it] develop new ones” and that it “use[s] information to improve [its] services and to develop new products, features and technologies that benefit [its] users and the public,” which can include “us[ing] publicly available information to help train Google’s AI models.”174 Meta’s Privacy Policy describes how it uses both the content provided by users and information about user activity to develop, improve, and test its products, which can include GenAI products.175 This can include a Facebook user’s posts, messages, voice, camera roll images and videos, hashtags, likes, and purchases.176 Additionally, Meta’s use of users’ AI prompts, “which could include text, documents, images, or recordings,” is governed by broad use provisions in the Meta Privacy Policy.177

User-provided content might also be shared with third parties. OpenAI’s privacy policy states that it may share personal information with third parties, including vendors, service providers, and affiliates.178 OpenAI sets almost no limitations on its use of anonymous, aggregated, and de-identified data.179 Google claims that it does not share personal information with third parties except in the following circumstances: (1) the user consents, (2) in the case of organizational use of Google by schools or companies, (3) for external processing, or (4) when sharing is required or permitted by law.180 Google states, however, that it “may share non-personally identifiable information publicly and with [their] partners—like publishers, advertisers, developers, or rights holders.”181 According to Meta, “[b]y using AIs, you are instructing [them] to share your information with third parties when it may provide you with more relevant or useful responses.”182 This includes personal information about the user or third parties.183 Meta’s Privacy Policy describes the broadest data-sharing practices of the three companies, permitting the sharing of all user data, including personal data, with partners, vendors, service providers, external researchers, and other third parties.184 Meta advises users “not [to] share information that you don’t want the AIs to retain and use.”185

C. AI-Generated Output of Data

The output that GenAI models produce in response to user prompts is another source of data in the GenAI data lifecycle. These outputs can include personal data that the model learned either through training or from user-provided data. Despite efforts to prevent GenAI models from leaking personal data memorized in the model parameters during model training,186 researchers were able to extract personal data, including names, phone numbers, and email addresses, from GPT-2.187 In a later study, researchers extracted data not only from open models (those with publicly available training datasets, algorithms, and parameters) and semi-open models (those with only publicly available parameters),188 but also from ChatGPT, a closed model. They were “able to extract over 10,000 unique verbatim memorized training examples” from ChatGPT (GPT-3.5).189 These samples included personal data such as “phone numbers, email addresses, and physical addresses (e.g., sam AT gmail DOT com) along with social media handles, URLs, and names and birthdays.”190 Over eighty-five percent of this information was the personal data of real individuals, rather than hallucinated.191 The researchers cautioned that attackers with more resources could likely gather up to 10 times more training data from ChatGPT.192 GenAI developers disclose that outputs may be inaccurate but provide little information about the risk of the model disclosing a user’s personal data.193 OpenAI gives users the option to submit a correction request in cases where the model provides inaccurate information about a user; however, this does nothing to prevent GenAI output from disclosing accurate personal data.194

D. Data Retention

Once personal data is collected by companies for developing and improving GenAI models, it may be retained for further use. OpenAI claims that ChatGPT does not store its training data.195 However, it states that it stores users’ personal information, which includes user input, “as long as we need in order to provide our Service to you, or for other legitimate business purposes such as resolving disputes, safety and security reasons, or complying with our legal obligations.”196 This is presumably for as long as a user has an active account.197 Although OpenAI provides users with an option to request that their personal data no longer be processed, removal of personal information from OpenAI’s applications is not guaranteed.198 For example, OpenAI notes that it will consider requests “balancing privacy and data protection rights with public interests like access to information, in accordance with applicable law.”199

Meta also allows users to submit a request to obtain, delete, or restrict Meta’s use of their personal information to train its AI models.200 Notably, this request only relates to personal data that Meta obtains from third parties and not data that it obtains directly from the user.201 To limit the use of personal data that users provide to Meta directly for any purpose, including training GenAI models like Llama 2, users are instructed to delete their Meta accounts (Facebook and Instagram) or to exercise their rights under data protection laws.202

Figure A illustrates the flow of personal data in the GenAI data lifecycle.

IV. The Protection of Personal Data in the GenAI Data Lifecycle in the US and EU

As demonstrated above, the flow of personal data through the GenAI data lifecycle is complex, and it can be difficult for users to keep track of how GenAI developers and third parties might access and use their personal data. As a result, several data privacy implications arise in connection with the use of publicly available, private, and sensitive personal data in the GenAI data lifecycle in both the US and EU. Individuals may also lose control over the accuracy and retention of their personal data.

A. Publicly Available Personal Data

GenAI training datasets include troves of publicly available personal data scraped from the internet. Third parties can examine these datasets to extract personal data in ways that can threaten individuals’ privacy. For example, researchers analyzing the open access German-language LAION dataset were able to determine both the identity of a man in a naked photograph and the exact address where a baby’s photograph was taken.203 Additionally, GenAI models themselves might disclose personal data to third parties because they “memorize portions of the data on which they are trained; as a result, the model can inadvertently leak memorized information in its output.”204

One view is that individuals should expect that any data they share publicly online is up for grabs and not subject to any privacy protections.205 On the other hand, the reproduction of personal data that individuals provide online could still violate reasonable expectations of data privacy. An individual might decide to disclose personal data in a specific public online setting for a specific purpose without any expectation that the same data will be used to train GenAI models. In fact, many users who originally provided personal data online could not have reasonably expected that future technology would be used by companies that did not yet exist to collect, use, profit from, and potentially publish their personal data far outside of the parameters in which it was originally shared.206 For example, a Reddit user might share personal data in a city-based group about depression for the purpose of obtaining mental health support, but the same user would likely not expect this information to become available to a virtually unlimited audience as part of a GenAI training dataset. Additionally, the context and privacy implications of disclosing personal data may change over time.207 Suppose, for example, that the same user also disclosed to the online support group that her mental health condition was related to a legal abortion, and that months later the same procedure is deemed illegal.

In the US, the FTC expressed early concerns about the individual reference services (IRS) industry’s collection and use of publicly available personal data, noting that “advances in computer technology have made it possible for more detailed identifying information to be aggregated and accessed more easily and cheaply than ever before.”208 It worried that consumers would be “adversely affected by a perceived privacy invasion, the misuse of accurate information, or the reliance on inaccurate information” and noted the “potential harm that could stem from access to and exploitation of sensitive information in public records and publicly available information.”209 Unfortunately, the FTC’s interest in this topic appears to have waned, given the current absence of regulatory efforts focused on protecting consumers from harm that may stem from the access, aggregation, and use of publicly available personal data. The US federal laws that govern subsets of personal data also offer little protection from broad disclosure of publicly available personal data. The Gramm-Leach-Bliley Act does not regulate personal information that is lawfully made public.210 While COPPA, FERPA, and HIPAA do not exclude publicly available information from regulation, these laws offer only limited and fragmented protection for certain subcategories of personal data that are collected and used under certain circumstances and would likely not protect publicly available personal data from data scraping practices or from being accessed in the resulting datasets.211

The exclusion of publicly available information from general state data privacy laws like the CCPA and VCDPA leaves open questions about whether all personal data scraped from the web is considered “publicly available.”212 Notably, the CPRA’s amendments to the CCPA broadened the scope of what is considered “publicly available” to include information in government records that is used for a purpose other than that for which it was originally collected.213 The drafters worried that limiting the use of information in public records would infringe upon constitutionally protected free speech.214 However, personal data disclosed online might not be considered “publicly available” if the individual restricted their self-publication to a specific audience. Similarly, if a third party publishes personal data about an individual outside of the original audience restrictions, this data may not be considered publicly available. Theoretically, data scraping should not collect self-published personal data that is not available to the “general public” because of audience restrictions; however, it can be difficult to determine whether personal data published to the general public by a third party was originally restricted to a specific audience. For example, individuals, companies, or government organizations might make the personal data of another individual publicly available, and the collection of this data to train GenAI might violate the data subject’s privacy.

Unlike US privacy laws, the GDPR does not exclude publicly available data from its scope, and processing such data requires a valid legal basis.215 Consent, contract, and/or legitimate interests are the most likely legal bases for processing personal data in the GenAI data lifecycle.216 Because scraping publicly available personal data generally occurs without the knowledge of, or any contact with, the data subjects concerned, advisory committees have noted that such processing must be supported by sufficient legitimate interests.217 The public availability of data can, however, strengthen a processor’s legitimate interests “if the publication was carried out with a reasonable expectation of further use of the data for certain purposes.”218 On the other hand, the broad collection and use of publicly available data implicates a wide range of privacy concerns for a large number of data subjects who are likely not aware that their personal data will be used for training GenAI models.219 The EDPB ChatGPT taskforce notes that when balancing the data controller’s legitimate interests with data subjects’ fundamental rights, “reasonable expectations of data subjects should be taken into account.”220 However, it also indicates that data controllers who employ technical measures to (1) exclude certain categories of data from data scraping and (2) delete or anonymize data that has been scraped are more likely to succeed in claiming legitimate interests as a valid legal basis for processing training data.221

Scraping publicly available data for training GenAI can be particularly intrusive when the data can be used to make predictions about an individual’s behavior.222 In Europe, the French, Italian, and Greek national data protection authorities (“DPAs”), charged with national enforcement of the GDPR, imposed fines on US-based Clearview AI for GDPR violations stemming from the company’s data scraping practices.223 Specifically, the DPAs found that Clearview AI’s scraping of over 10 billion publicly available facial images and associated metadata for the purpose of further processing those images to create a facial recognition database violated the GDPR’s requirements of lawfulness, fairness, and transparency in personal data processing.224 The DPAs rejected the company’s alleged legitimate interest in making a business profit as a valid legal basis for its data processing, ordered the erasure of such data, banned further collection, and each fined the company 20 million euros.225 In assessing the balance between Clearview AI’s economic interest and data subjects’ fundamental rights, the DPAs highlighted that (1) the biometric data produced from the processing of these images was particularly intrusive and concerned a large number of people, and (2) the data subjects were not aware and could not have reasonably expected that their photographs and the associated metadata would be used to develop facial recognition software when they consented to the original publication of their photographs.226

Although, as demonstrated by the Clearview AI case, the general framework of the GDPR is already flexible enough to govern the large-scale processing of publicly available personal data, the EU also appears focused on protecting data subjects from illegal use of publicly available personal data for the specific purpose of training GenAI models. In addition to the EDPB’s ChatGPT taskforce, the Confederation of European Data Protection Organizations (CEDPO), which seeks to harmonize data protection practices in the EU member states, developed its own taskforce to address GDPR compliance during the processing phase of the GenAI data lifecycle.227 On the national level, the French data protection authority issued an action plan that will consider, among other issues, “the protection of publicly available data on the web against the use of scraping, or scraping, of data for the design of tools.”228 Notably, the EU is not ignoring the potential benefits of GenAI or attempting to regulate these models out of existence; instead, the CEDPO recognizes that, “[t]here will be no future without generative AI, and with data playing such a pivotal role in the training and operating of these systems, DPOs will play a central role in ensuring that both data protection and data governance standards are at the heart of these technologies.”229

Finally, although data scraping is intended to collect only data that is legally available to the general public, it is also important to recognize that this practice can amplify existing data privacy violations resulting from the unauthorized disclosure of sensitive personal data online. For example, an outdated data protocol for electronic medical record storage resulted in the unauthorized online disclosure of more than 43 million health records, which included patients’ names, genders, addresses, phone numbers, social security numbers, and details from medical examinations.230 This sensitive personal data might be collected in a data scrape, used to train GenAI models, and end up in the hands of third parties, even though it should not have been publicly available to begin with.

B. Private and Sensitive Personal Data

As a source of personal data in the GenAI data lifecycle, individual users can supply private (i.e., not publicly available) and sensitive personal data about themselves or others while using services and software provided by GenAI companies, including the GenAI applications themselves. In fact, the conversational nature of GenAI applications can cause users to let their guard down and overshare personal data.231 The GenAI model might then use this personal data to infer more personal data, even sensitive personal data, about an individual.232 The EDPB ChatGPT taskforce notes that despite policies that warn users to refrain from providing personal data to ChatGPT, “it should be assumed that individuals will sooner or later input personal data,” and that this data must still be processed lawfully.233 Additionally, geolocation services and wearable technology might also provide troves of sensitive personal data. While users might agree to share location data to use Google Maps and Uber, they may not realize that this data can “reveal a lot about people, including where we work, sleep, socialize, worship, and seek medical treatment.”234 Other technologies, like smartwatches and apps that monitor blood sugar or menstrual cycles, also collect sensitive personal data from users. Once private and sensitive personal data are in the digital marketplace, they can become part of the datasets used to train GenAI models and available to third parties. The “unexpected revelation of previously private information … to unauthorized third parties” can be harmful, particularly when this personal data is later used for discriminatory purposes.235

In the US, the FTC has expressed some concern about whether the companies that develop LLMs are “engaged in unfair or deceptive privacy or data security practices,” particularly when sensitive data is involved.236 The FTC considers itself uniquely positioned to address consumer concerns about unfair or deceptive practices involving personal data collection and use in digital markets because it considers interests in both consumer protection and competition.237 This includes protecting consumers’ data privacy and ensuring that businesses do not gain an unfair competitive advantage as a result of illegal data practices.238 For example, after a photo and video storage company used data uploaded by consumers for GenAI development without users’ consent, the FTC, relying on its authority under the FTC Act, ordered the platform to obtain user consent for using biometric data from videos and photos stored on the platform and to delete any algorithms that were trained on biometric data without explicit user consent.239 The FTC has also taken legal action against companies that obtained, shared, sold, or failed to protect consumers’ sensitive data, including location data for places of worship and medical offices, health information, and messages from incarcerated individuals.240 On the other hand, the FTC’s ability to protect personal data is limited by the scope of the FTC Act, which only prohibits data practices that are either deceptive or unfair from the perspective of a reasonable consumer.241 The FTC has urged Congress to enact more general data protection laws, and some FTC Commissioners have called specifically for Congress to require companies to “take reasonable steps … to ensure that the consumer data they obtain was procured by the original source … with notice and choice, including express affirmative consent for sensitive data.”242

Sector-specific federal laws offer limited upstream protection for certain subcategories of personal data that may end up in the GenAI data lifecycle. The Gramm-Leach-Bliley Act’s disclosure and opt-out requirements can provide individuals with notice and some control over how their nonpublic personal information is collected and used by financial institutions, including the sharing of such data with third parties for GenAI development, but they do not require explicit consent.243 COPPA and FERPA likely both require consent prior to the collection and use of personal data concerning children and personal data contained in education records, respectively, in the GenAI data lifecycle.244 However, FERPA’s failure to prohibit the publication of “directory information” would allow some categories of personal information to become publicly available and subject to third-party collection via data scraping.245 Neither the Gramm-Leach-Bliley Act, nor COPPA, nor FERPA provides special protections for sensitive personal data.246 HIPAA, on the other hand, governs only a subcategory of sensitive personal data through its regulation of PHI.247 Outside of exceptions for limited data sets, HIPAA would prohibit the use and disclosure of PHI for the purpose of GenAI development without the individual’s consent.248 For example, if a healthcare provider inputs PHI into a GenAI model without patient authorization, this disclosure would likely violate HIPAA.249 Notably, COPPA, FERPA, and HIPAA only govern the activities of specific actors in their respective industries, leaving the regulation of more general personal data processing to the FTC and state data privacy laws. For example, in most cases, HIPAA would not protect the privacy of PHI that individuals provide to GenAI models because the companies that own these models are not “covered entities” or “business associates” under HIPAA.250

The CCPA and VCDPA rely primarily on information obligations and the consumer’s ability to opt out of data sharing (or disclosure to third parties) to protect personal data.251 Both laws would require businesses to inform consumers of plans to collect and use personal information for GenAI purposes prior to data collection. Theoretically, consumers can either initially refrain from sharing personal data for GenAI purposes or subsequently limit the sharing of their personal data.252 In addition to information obligations, the VCDPA, but not the CCPA, would prohibit the collection and use of sensitive personal data for GenAI purposes unless the consumer provided consent.253

The GDPR does not distinguish between publicly available personal data and private personal data. As a result, the collection of publicly available personal data through data scraping, like the collection of personal data directly from an individual data subject, must be supported by a valid legal basis. In March 2023, the Italian DPA banned ChatGPT because “OpenAI had no legal basis to justify ‘the mass collection and storage of personal data for the purpose of “training” the algorithms underlying the operation of the platform.’”254 In April 2023, the ban was lifted after OpenAI responded with increased transparency about how the company processed user data and offered an opt-out option for users who did not want their conversations used to train ChatGPT.255 However, in January 2024, the Italian DPA alleged that OpenAI was again violating the GDPR, though the details of this investigation have not yet been released.256

The GDPR does offer additional protections for special categories of data, also known as sensitive data. Processing such data in the GenAI data lifecycle is generally prohibited unless it falls under one of the Article 9 exceptions. Notably, none of these exceptions permits processing special categories of personal data for entering into or performing a contract or to advance legitimate interests.257 While special categories of personal data that are “manifestly made public by the data subject” are excepted from Article 9’s prohibition on processing, the EDPB’s ChatGPT taskforce warns that public availability itself is not enough; instead, the data subject must have “intended, explicitly and by a clear affirmative action, to make the personal data in question accessible to the general public” for this exception to apply.258 As a result, processing special categories of personal data for the development and continued operation of GenAI will likely require explicit consent, meaning an “express statement of consent” from the data subject.259

C. Control Over Personal Data

It is challenging for individuals to control the flow of their personal data in the GenAI data lifecycle. The FTC warns that “[t]he marketplace for this information is opaque and once a company has collected it, consumers often have no idea who has it or what’s being done with it.”260 Although the EU and US both seek to give individuals some control over their personal data through rights to request access to, correction of, and deletion of that data, exercising these rights can be difficult, or impossible, in the context of the GenAI data lifecycle.

Once personal data enters the GenAI data lifecycle, individuals may not be able to effectively exercise their rights to correct or delete personal data under either US or EU data privacy laws. This is especially problematic because GenAI can produce incorrect information about a real individual. For example, ChatGPT generated a false but detailed accusation of sexual harassment by a real law professor, citing a Washington Post article that it hallucinated.261 It also falsely reported that an Australian mayor served a prison sentence for bribery.262 In these cases, the use of personal data for GenAI training led to defamatory statements about real, identifiable individuals. In the US, the FTC sent OpenAI an information request indicating concern that OpenAI “violated consumer protection laws, potentially putting personal data and reputations at risk” in connection with ChatGPT’s ability to “generate false, misleading, or disparaging statements about real individuals.”263 In Europe, the CEDPO also recognizes the danger of inaccurate GenAI outputs, noting that “[g]enerative AI systems must provide reliable and trustworthy outputs, especially about European citizens whose personal data and its accuracy is protected under the GDPR.”264 Recently, noyb, a non-profit organization that seeks to privately enforce the GDPR, filed a complaint alleging that ChatGPT’s repeated inaccurate output concerning an individual’s date of birth violates the accuracy principle in Article 5(1)(d) of the GDPR.265 The complaint further alleges that OpenAI’s inability to prevent ChatGPT from hallucinating inaccurate personal data, or to erase or rectify the inaccurate data, also constitutes a violation of Article 5(1)(d).266 noyb’s complaint against OpenAI requests, among other things, that the Austrian DPA investigate and fine OpenAI for these alleged data privacy violations.267 The EDPB ChatGPT taskforce notes that although OpenAI might warn users that ChatGPT can produce inaccurate data to satisfy the GDPR’s transparency principle, this does not relieve OpenAI of its obligation to comply with the GDPR’s data accuracy principle.268

Despite privacy rights designed to protect the use and accuracy of personal data in both the US and EU, there are several reasons why individuals’ requests to delete or correct their personal data might be futile. First, data scraped from the internet will be retained in the resulting dataset even if it is later removed from an online public forum. As a result, “even if individuals decide to delete their information from a social media account, data scrapers will likely continue using and sharing information they have already scraped, limiting individuals’ control over their online presence and reputation.”269 Second, once personal data is used to develop a GenAI model, it can be difficult to extract specific data from the model to remove it after the fact.270 Third, individuals may not be able to provide sufficient proof that their personal data is being used in the GenAI data lifecycle. For example, some users who submitted data deletion requests report that Meta provides a boilerplate response claiming that “it is ‘unable to process the request’ until the requester submits evidence that their personal information appears in responses from Meta’s generative AI.”271 Similarly, OpenAI and Midjourney failed to respond to an individual’s request to have her image deleted.272

Conclusion

The flow of personal data through the GenAI data lifecycle introduces new challenges for data privacy. This Article provides interdisciplinary insight into the role and legal implications of personal data in the modern GenAI data lifecycle and examines the resilience of data privacy frameworks in the US and EU in light of these implications. In Part I, we described the architecture behind modern GenAI models. Part II identified the relevant data privacy frameworks in the US and EU. We dissected and compared the US’s fragmented approach and the EU’s comprehensive approach to data privacy to reveal that while both jurisdictions offer some data privacy protections through a combination of information obligations and use restrictions, as a default, the US allows personal data processing unless specifically prohibited, while the EU prohibits personal data processing unless specifically allowed. In Part III, we described how personal data is stored inside GenAI models, commonly without express consent, and is present at every stage of the GenAI data lifecycle. We explained how personal data, including publicly available personal data as well as private and sensitive personal data, comes from a variety of sources and how it is used to train, operate, and improve GenAI models.

Part IV identified several implications that the flow of personal data through the GenAI data lifecycle has for data privacy and explored whether the US and EU data privacy frameworks are equipped to deal with these new concerns. We first explained how the widespread disclosure of publicly available personal data might violate individuals’ expectations of privacy. We concluded that in the US, the data privacy framework offers little protection to publicly available data collected through data scraping and used to develop GenAI models. On the other hand, the EU’s GDPR does not distinguish between private and publicly available data and, as such, offers more protection. Next, we discussed how GenAI models could collect and disclose private and sensitive personal data. Both the US and EU protect private and sensitive personal data in the GenAI data lifecycle by regulating disclosure of such data; however, the US’s piecemeal approach to data privacy regulation still leaves gaps, particularly in cases where individuals did not authorize and are not aware that their personal data are being processed in the GenAI data lifecycle. Finally, although both jurisdictions seek to provide individuals with rights to control the accuracy of and access to their personal data, we highlighted how GenAI’s ability to produce inaccurate data about individuals or retain personal data indefinitely may not be remedied by individuals’ rights to access, correct, and delete their information under US and EU data privacy laws.

As one of the latest applications of data-hungry technology, GenAI introduces new concerns about data privacy. These concerns are already on the radar of regulators in the US and EU and can already be managed to some extent by the data privacy frameworks in place; however, both jurisdictions should pay special attention to the unprecedented and sweeping collection and use of personal data from public sources that underpin GenAI models, particularly because many individuals may be unaware of how their personal data is used in the GenAI data lifecycle and may never have explicitly agreed, either individually or collectively, to such processing in the first place.

Acknowledgements

Mindy Duffourc and Sara Gerke’s work was funded by the European Union (Grant Agreement no. 101057321). Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the Health and Digital Executive Agency. Neither the European Union nor the granting authority can be held responsible for them.

Appendix

Appendix A. Summary Comparison of Data Types in General Data Protection Laws in the US and EU

| Data type | FTC Act (US) (based on FTC policy documents) | CCPA (California) | VCDPA (Virginia) | GDPR (EU) |
|---|---|---|---|---|
| Personal | | | | |
| Sensitive | | | | |
| De-identified/Pseudonymized | | | | |
| Anonymous | | | | |
| Aggregate | | | | |
| Publicly Available | | | | |

Appendix B. Summary Comparison of Data Types in US Sector-Specific Laws

| Data type | The Gramm-Leach-Bliley Act | COPPA | FERPA | HIPAA |
|---|---|---|---|---|
| Main Type of Personal Data Governed | | | | |
| De-identified/Pseudonymized | | | | |
| Anonymous | | | | |
| Aggregate | | | | |
| Publicly Available | | | | |